Deleting large volumes of data from a SQL Server table looks simple, but it can cause real problems if done carelessly.

A single large DELETE can:

- Blow out the transaction log

- Hold locks far longer than expected

- Block other workloads

- Increase recovery time if something goes wrong

When you’re cleaning up historical data or running routine maintenance, deleting rows in batches is usually the safest and most predictable approach.

This post shows a simple, production-safe pattern for deleting data in batches, and highlights the things that matter when you run it on a live system.

Why Delete in Batches

Large deletes are expensive because SQL Server treats them as one big transaction.

Deleting in batches helps because it:

- Keeps transactions small

Reduces the chance of log exhaustion and long rollbacks. - Reduces transaction log pressure

Especially important in full recovery. - Releases locks more frequently

Other workloads get a chance to run. - Gives you control over impact

You decide how aggressive the operation is.

It’s not about speed at all costs. It’s about controlling risk.

Basic Batch Delete Pattern

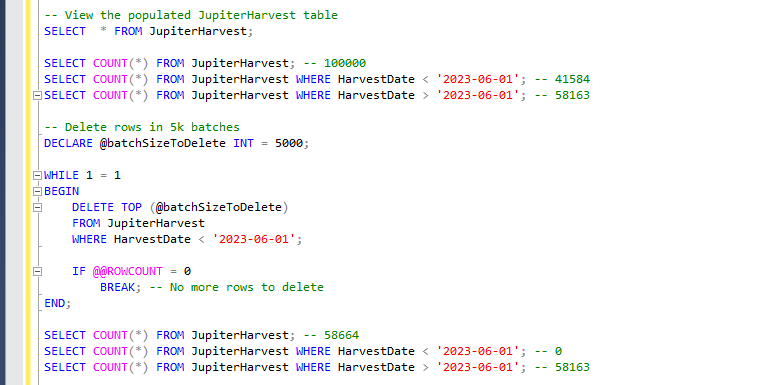

The pattern below deletes rows in fixed size chunks until there is nothing left to remove.

-- Delete data in batches

DECLARE @BatchSize INT = 5000;

WHILE 1 = 1

BEGIN

DELETE TOP (@BatchSize)

FROM dbo.JupiterHarvest

WHERE HarvestDate < '2023-01-01';

IF @@ROWCOUNT = 0

BREAK;

END;This loop deletes 5,000 rows at a time until no qualifying rows remain.

The batch size is small enough to avoid large transactions, but large enough to make steady progress.

Making the Pattern Safer in Production

The core loop rarely changes, but two small additions make a big difference on busy systems.

Delete rows in a predictable order

Without an ORDER BY, SQL Server can delete any qualifying rows. That can cause uneven locking and unnecessary page churn.

If you are deleting time-based data, delete the oldest rows first.

Change only the DELETE statement, add ORDER BY:

-- Add ORDER BY to delete statement for better performance

DELETE TOP (@BatchSize)

FROM dbo.JupiterHarvest

WHERE HarvestDate < '2023-01-01'

ORDER BY HarvestDate;This keeps the operation predictable and works best when the predicate column is indexed.

Throttle the loop when needed

On busy systems, even small batches can add pressure.

Adding a short pause between batches gives SQL Server time to recover.

Add this inside the loop, after the DELETE:

-- Add a one second delay

WAITFOR DELAY '00:00:01';A one-second delay is often enough to reduce pressure on:

- The transaction log

- Disk I/O

- Concurrent workloads

You are trading runtime for stability, which is usually the right decision.

Transaction Log Considerations

Batch deletes reduce log pressure, but they do not eliminate it.

Things to keep in mind:

- Full recovery requires working log backups

If log backups are failing, batching will not save you. - Long-running loops can still fill the log

Especially if deletes run faster than backups. - Log monitoring matters

Watch usage while the delete is running.

👉 How to Check Transaction Log Space Usage in SQL Server

Use this to monitor log usage during large maintenance deletes.

Locking and Concurrency

Even with batching, deletes still take locks.

Practical guidance:

- Run deletes during low traffic windows

- Ensure the WHERE clause is indexed

- Avoid broad or non-selective predicates

- Test on production-like data first

Batching reduces how long locks are held, not whether they exist.

Index and Fragmentation Impact

Large deletes almost always fragment indexes.

After a significant clean-up:

- Review fragmentation levels

- Rebuild or reorganise where it makes sense

- Do not blindly rebuild everything

👉 SQL Server Script: Show Database Growth Events

Useful for understanding how deletes and follow-up maintenance affect file growth.

Optional Safety Improvements

In real environments, you often layer in extra control:

- WAITFOR DELAY between batches to reduce pressure

- Progress logging to a table

- Explicit error handling around the loop

- Execution via SQL Agent with defined schedules and alerts

These depend on system criticality. The core pattern stays the same.

Final Thoughts

Batch deletes are not clever, but they are reliable.

If you need to remove large volumes of data safely, predictably, and with time to react when something looks wrong, this pattern is hard to beat.

It’s one of those techniques every DBA ends up using sooner or later.

Leave a Reply